Bio: I am a faculty member at CISPA Helmholtz Center for Information Security, where I lead the AIR-ML Lab. Prior to this, I earned my PhD in Computer Science at the University of Virginia, where I was advised by Prof. David Evans. I also hold an MS degree in Statistics from the University of Virginia and a BS degree in Mathematics and Applied Mathematics from Tsinghua University. I am a member of the European Laboratory for Learning and Intelligent Systems (ELLIS), affiliated with the ELLIS Unit Saarbrücken.

Research Interests: My research spans a broad range of topics in machine learning (ML), with a primary focus on trustworthy AI, encompassing robustness, safety, privacy, bias, and interpretability. I’m also interested in deep learning theory, generative modeling, and optimization. Ultimately, my goal is to develop principled adversarial ML approaches to tackle the fundamental challenges in building reliable and trustworthy AI systems.

I am always looking for self-motivated students interested in machine learning research, including PhD students, HiWis, interns, and visiting students. An up-to-date list of my publications is available on Google Scholar. Please visit our lab website for more information about our current research directions and available positions.

Featured Publications

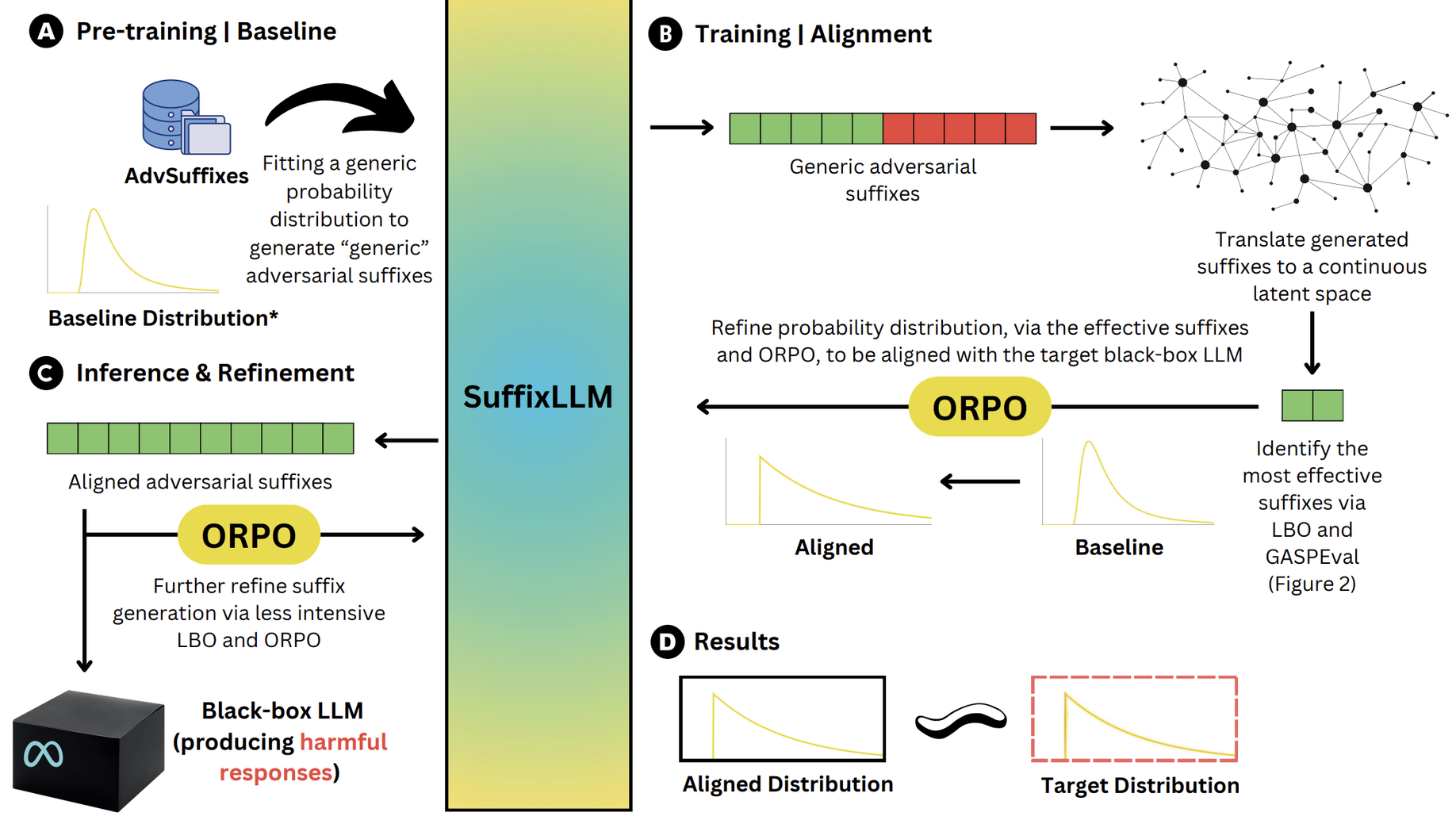

GASP: Efficient Black-Box Generation of Adversarial Suffixes for Jailbreaking LLMs

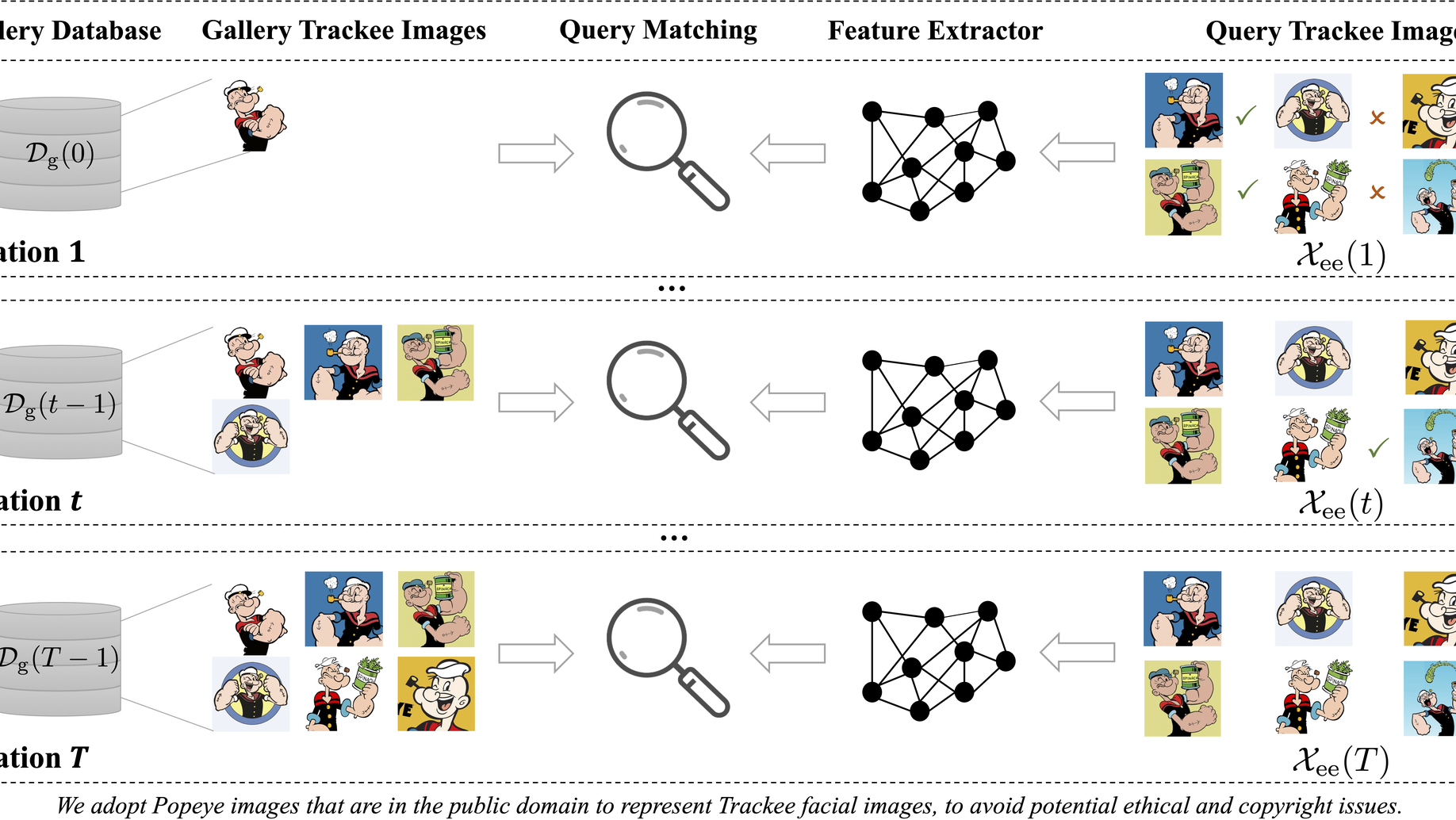

DivTrackee versus DynTracker: Promoting Diversity in Anti-Facial Recognition against Dynamic FR Strategy

Do Parameters Reveal More than Loss for Membership Inference?

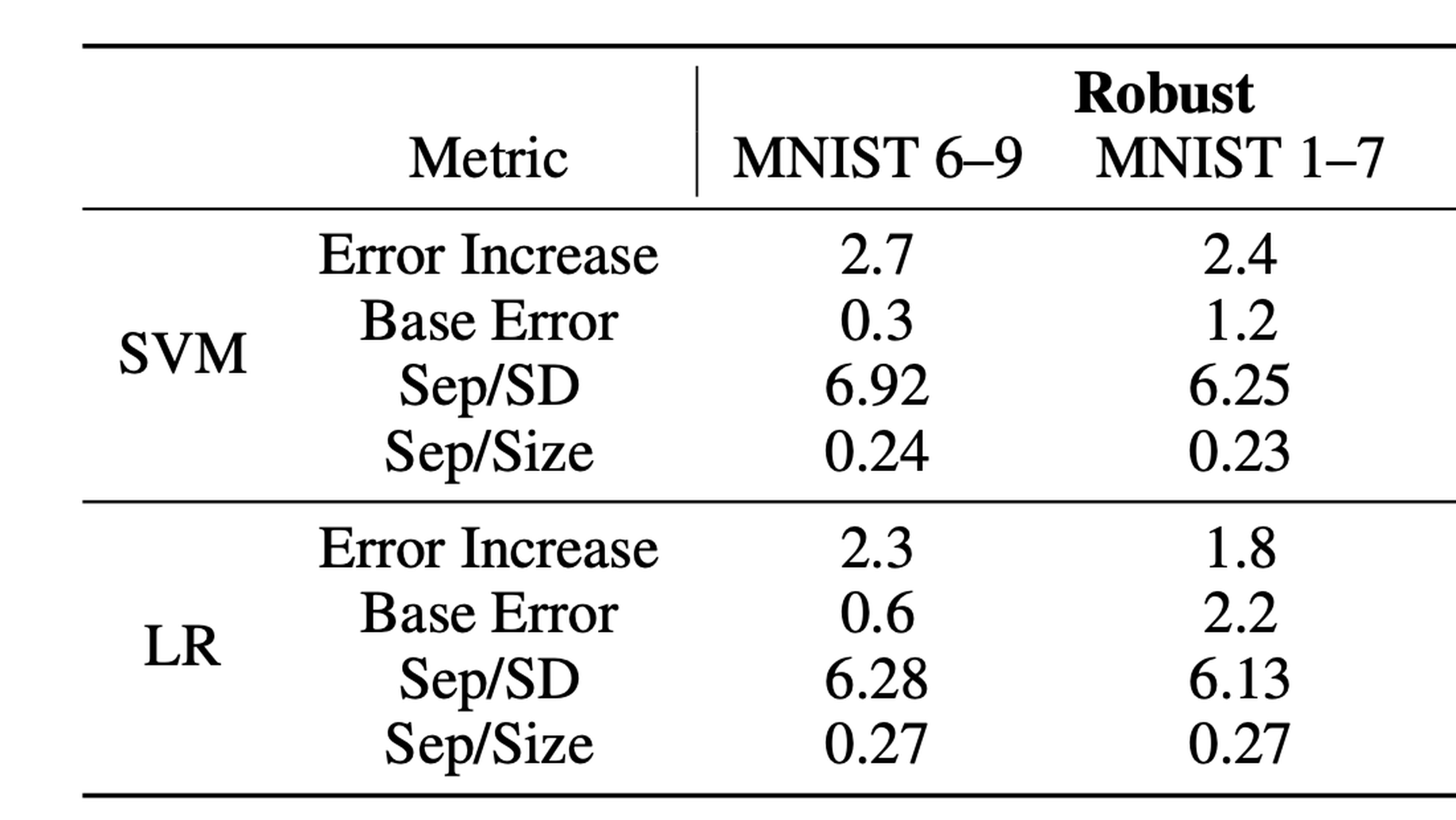

What Distributions are Robust to Indiscriminate Poisoning Attacks for Linear Learners?

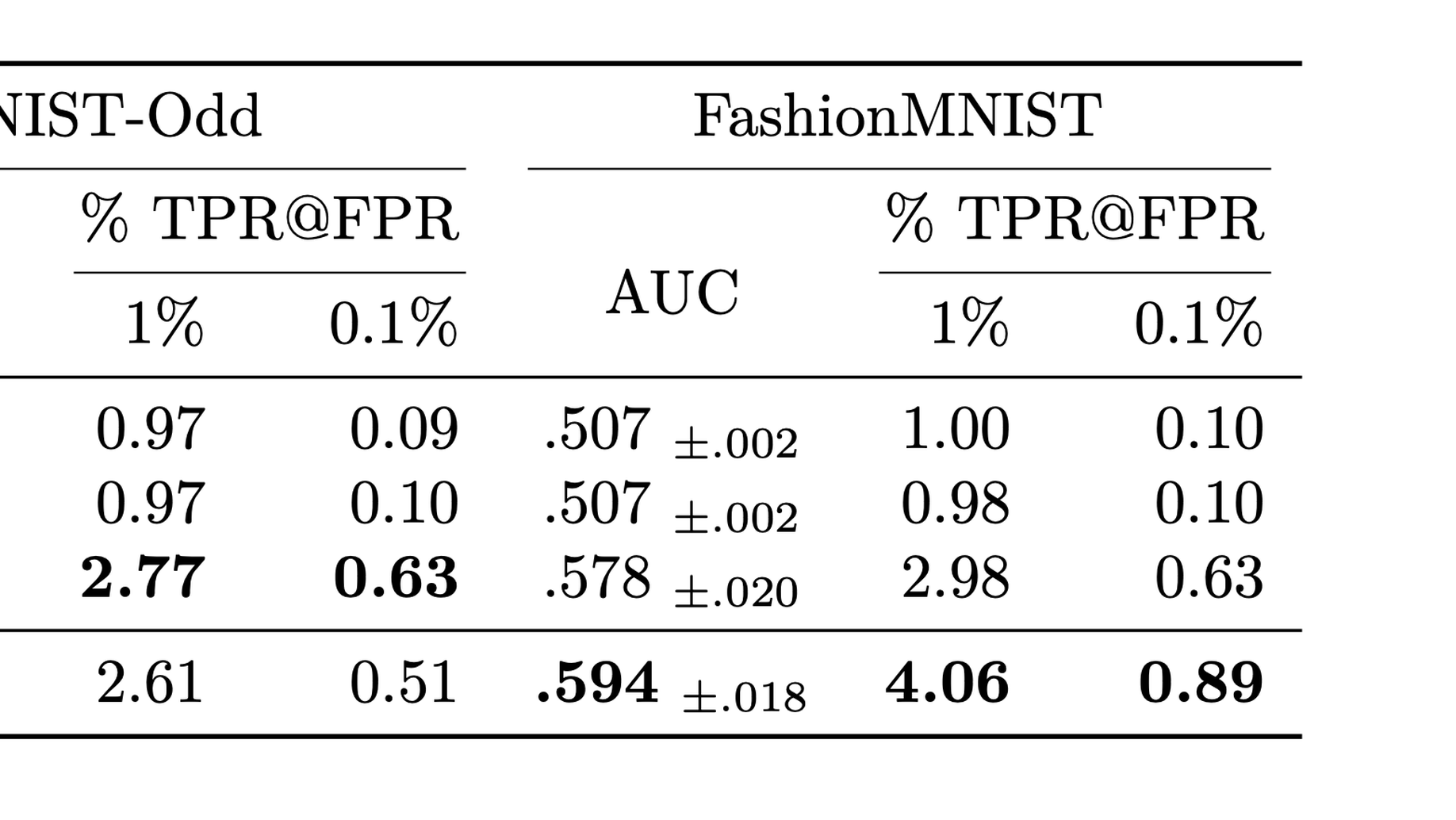

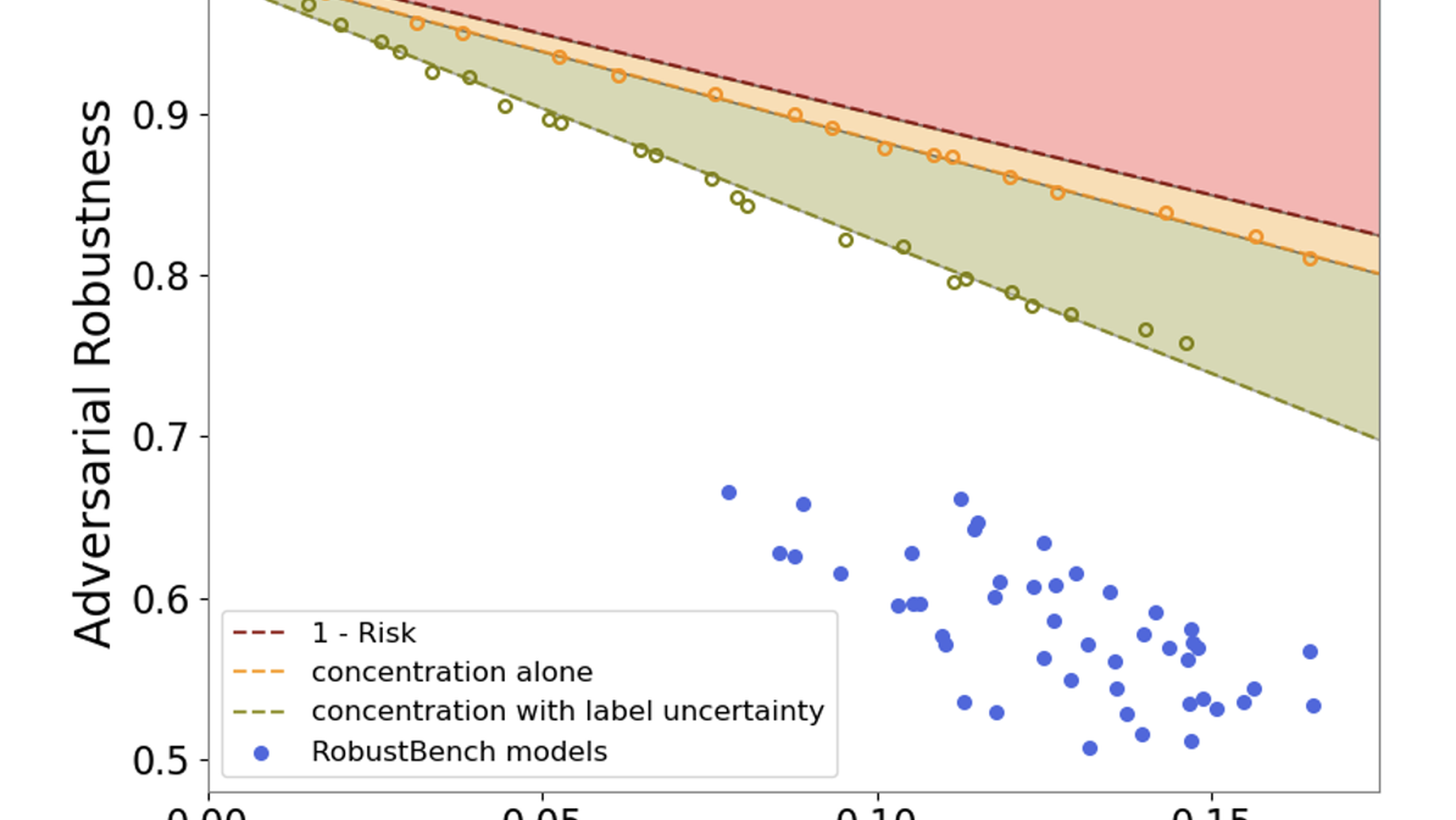

Understanding Intrinsic Robustness using Label Uncertainty

Publications

Contact

- xiao.zhang@cispa.de

- +49 681 87083 2342

- Im oberen Werk 1, St. Ingbert, Saarland 66386

- My office is Room 0.28 in the CISPA D2 building